Scheduling is one of the main aspects of resource management in Apache Hadoop YARN. The Scheduler allocates resources to running applications based on their requirements. The Scheduler has a pluggable policy plug-in, and CapacityScheduler is an example of such a plug-in. The capacity scheduler enables multiple tenants to share a large cluster by allocating resources to their applications in a fair and timely manner. Each organization is guaranteed some capacity, and organizations are able to access any capacity that is not being used by other organizations.

A job is submitted to a queue. Queues are set up by administrators and have defined resource capacity. CapacityScheduler supports hierarchical queues, which are designed to enable the sharing of available resources among applications from a particular organization.

Queues use access control lists (ACLs) to determine which users can submit applications to them. This blog post shows you how to manage queue access with ACLs by using CapacityScheduler. Examples show how users and groups can be mapped to specific queues.

Enabling the YARN queue ACL

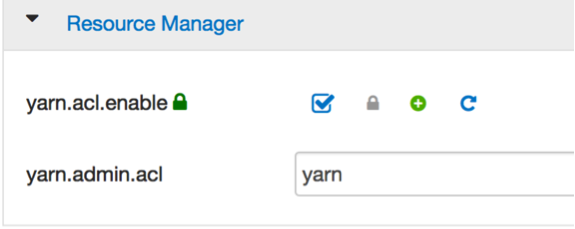

To control user access to CapacityScheduler queues, the yarn.acl.enable property must be set to true in yarn-site.xml. The yarn.admin.acl property defaults to all users (*), which means that anyone can administer all queues. In these examples, user yarn is the administrator of queue root and its descendant queues.

    yarn.acl.enable=true
    yarn.admin.acl=yarn

You can also set these properties through the Ambari web console (Figure 1).

Figure 1: Setting YARN ACLs with Ambari

Note: You must enable yarn.acl.enable for ACLs to work correctly, and you must not leave yarn.admin.acl empty. The default value (*, from yarn-default.xml) lets all users administer all queues, even the ones that they don’t own.
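
The properties in this article are written as name=value pairs, but Hadoop configuration files such as yarn-site.xml store them as XML property elements. The following Python sketch shows one way to render the two ACL settings in that form. The function name to_hadoop_xml is hypothetical and used only for illustration; it is not part of Hadoop or Ambari.

```python
from xml.sax.saxutils import escape


def to_hadoop_xml(properties):
    """Render a dict of property names and values as Hadoop-style configuration XML."""
    lines = ["<configuration>"]
    for name, value in properties.items():
        lines.append("  <property>")
        lines.append(f"    <name>{escape(name)}</name>")
        lines.append(f"    <value>{escape(value)}</value>")
        lines.append("  </property>")
    lines.append("</configuration>")
    return "\n".join(lines)


# The two ACL settings used in this article.
acl_settings = {
    "yarn.acl.enable": "true",
    "yarn.admin.acl": "yarn",
}
xml_text = to_hadoop_xml(acl_settings)
print(xml_text)
```

If you manage the cluster with Ambari, prefer the web console, because hand-edited files can be overwritten when services restart.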

CapacityScheduler

CapacityScheduler enables you to create queues and subqueues, each with different access and usage. Initially, there is one root queue and a default subqueue that is identified as root.default. Queues are configured in the /etc/hadoop/0/capacity-scheduler.xml file.

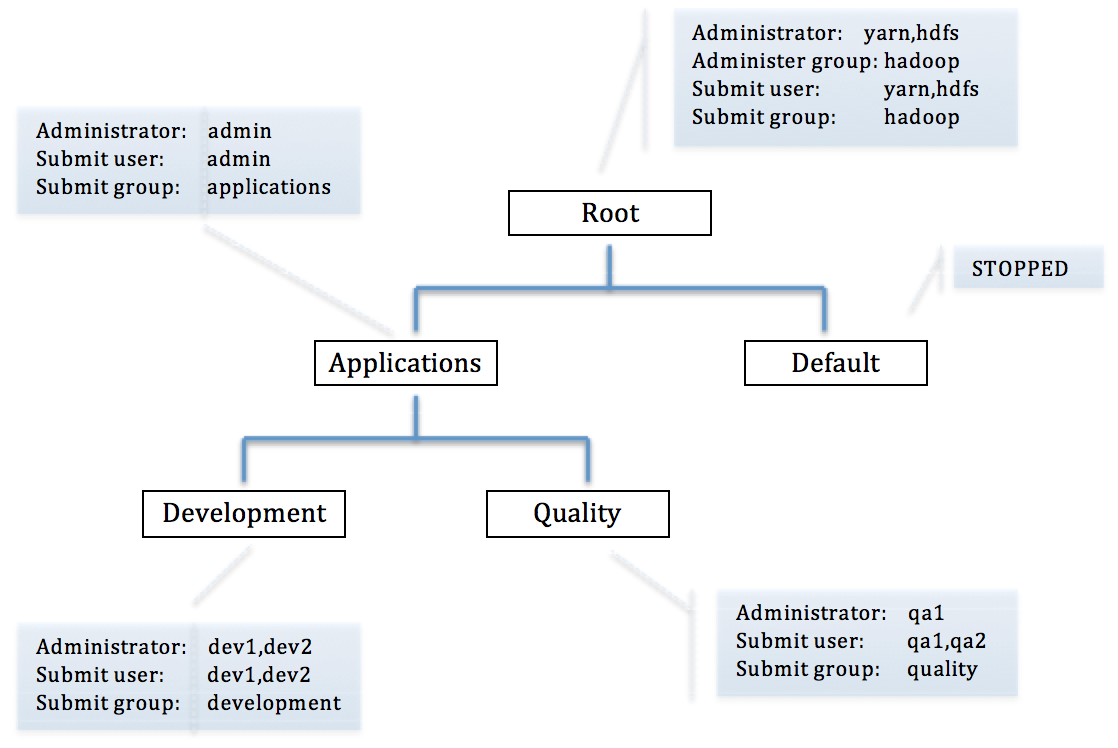

The example in Figure 2 shows the root queue with two subqueues, Applications and Default. The Applications queue itself has two subqueues, Development and Quality.

Queues have independent controls for who can administer and who can submit jobs. The administrator can submit, access, or kill a job, whereas a submitter can submit or access a job. These actions are controlled by the following YARN properties:

- acl_administer_queue

- acl_submit_applications
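
The values of both properties, as used in the configuration examples in this article, consist of a comma-separated list of users, a space, and a comma-separated list of groups; the value * allows everyone. The following Python sketch models that format for a single queue. It is a simplified illustration, not Hadoop's own parser, and the function names parse_acl and is_allowed are hypothetical.

```python
def parse_acl(value):
    """Split an ACL value into (users, groups, allow_all)."""
    if value.strip() == "*":
        return set(), set(), True
    # Everything before the first space lists users; everything after lists groups.
    user_part, _, group_part = value.partition(" ")
    users = {u for u in user_part.split(",") if u}
    groups = {g for g in group_part.strip().split(",") if g}
    return users, groups, False


def is_allowed(value, user, user_groups):
    """Return True if the user, or one of the user's groups, is named in the ACL."""
    users, groups, allow_all = parse_acl(value)
    return allow_all or user in users or bool(groups & set(user_groups))


# Values taken from the examples in this article.
print(parse_acl("yarn,hdfs hadoop"))
print(is_allowed("dev1,dev2 development", "dev1", ["development"]))
print(is_allowed("dev1,dev2", "qa1", ["quality"]))
```

This sketch checks one queue in isolation; it does not model permissions that a queue inherits from its parents.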

The following system users and groups are used in this example:

    users      | group
    -------------------------------
    yarn,hdfs  | hadoop
    admin      | applications
    dev1,dev2  | development
    qa1,qa2    | quality

Figure 2: Hierarchical overview of system users, groups, and queues

Inheritance rules

There are several important inheritance rules to understand. Child queues inherit the permissions of their parent queue, and by default these permissions are set to * (all users). Therefore, if the list is not restricted at the root queue, all users might still be able to run jobs on any queue. For example, suppose that queue root.Applications was configured as shown in the following example:

    acl_administer_queue=*
    acl_submit_applications=*

In this example, all child queues, no matter how they are configured, inherit the permissions of their parent, so every user can both administer and submit to any subqueue of root.Applications.
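
The inheritance rule can be sketched in Python as a walk from a queue up through its ancestors: access is granted as soon as any queue on that path allows the user. This is a simplified model under stated assumptions, not the scheduler's actual code. The names OPEN_PARENT, RESTRICTED_PARENT, and has_access are hypothetical, and each queue is modelled as a pair of sets (users, groups) for a single ACL such as acl_submit_applications.

```python
# root.Applications is open to everyone (*), as in the example above.
OPEN_PARENT = {
    "root": ({"yarn", "hdfs"}, {"hadoop"}),
    "root.Applications": ({"*"}, set()),
    "root.Applications.Development": ({"dev1", "dev2"}, {"development"}),
}

# The same hierarchy with root.Applications restricted to user admin.
RESTRICTED_PARENT = dict(OPEN_PARENT)
RESTRICTED_PARENT["root.Applications"] = ({"admin"}, {"applications"})


def ancestors_and_self(queue):
    """Yield the queue path followed by each ancestor, for example a.b.c, a.b, a."""
    parts = queue.split(".")
    for end in range(len(parts), 0, -1):
        yield ".".join(parts[:end])


def has_access(acls, queue, user, user_groups):
    """Return True if the queue or any of its ancestors allows the user."""
    for path in ancestors_and_self(queue):
        users, groups = acls.get(path, (set(), set()))
        if "*" in users or user in users or groups & set(user_groups):
            return True
    return False


development = "root.Applications.Development"
print(has_access(OPEN_PARENT, development, "qa1", ["quality"]))
print(has_access(RESTRICTED_PARENT, development, "qa1", ["quality"]))
print(has_access(RESTRICTED_PARENT, development, "admin", ["hdfs"]))
```

With the open parent, qa1 is allowed on Development even though the Development queue itself names only dev1, dev2, and the development group. Restricting the parent removes that access, while admin keeps it through root.Applications.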

CapacityScheduler properties

To control the administrator and submitter for queues in the example in Figure 2, the properties in the capacity-scheduler.xml file have been modified for queues root, root.Applications, root.Applications.Development, root.Applications.Quality, and root.default.

Queue properties: root

    #######################################################################################
    # Users yarn,hdfs and group hadoop can submit,access,kill applications in the root
    # queue and all descendant queues (Applications,Development,Quality) by setting
    # acl_administer_queue=yarn,hdfs hadoop
    # Users yarn,hdfs and group hadoop can submit applications in the root
    # queue and all descendant queues (Applications,Development,Quality) by setting
    # acl_submit_applications=yarn,hdfs hadoop
    #######################################################################################
    yarn.scheduler.capacity.root.acl_administer_queue=yarn,hdfs hadoop
    yarn.scheduler.capacity.root.acl_submit_applications=yarn,hdfs hadoop
    yarn.scheduler.capacity.root.capacity=100
    yarn.scheduler.capacity.root.queues=Applications,default
    yarn.scheduler.capacity.root.accessible-node-labels=*

Queue properties: root.Applications

    #######################################################################################
    # Only user admin can ADMINISTER (submit,access,kill) jobs in Applications queue
    # by setting acl_administer_queue=admin
    # User admin and members of the applications group can SUBMIT applications by setting
    # acl_submit_applications=admin applications
    #######################################################################################
    yarn.scheduler.capacity.root.Applications.acl_administer_queue=admin
    yarn.scheduler.capacity.root.Applications.acl_submit_applications=admin applications
    yarn.scheduler.capacity.root.Applications.minimum-user-limit-percent=100
    yarn.scheduler.capacity.root.Applications.maximum-capacity=100
    yarn.scheduler.capacity.root.Applications.user-limit-factor=1
    yarn.scheduler.capacity.root.Applications.state=RUNNING
    yarn.scheduler.capacity.root.Applications.capacity=100
    yarn.scheduler.capacity.root.Applications.queues=Development,Quality
    yarn.scheduler.capacity.root.Applications.ordering-policy=fifo

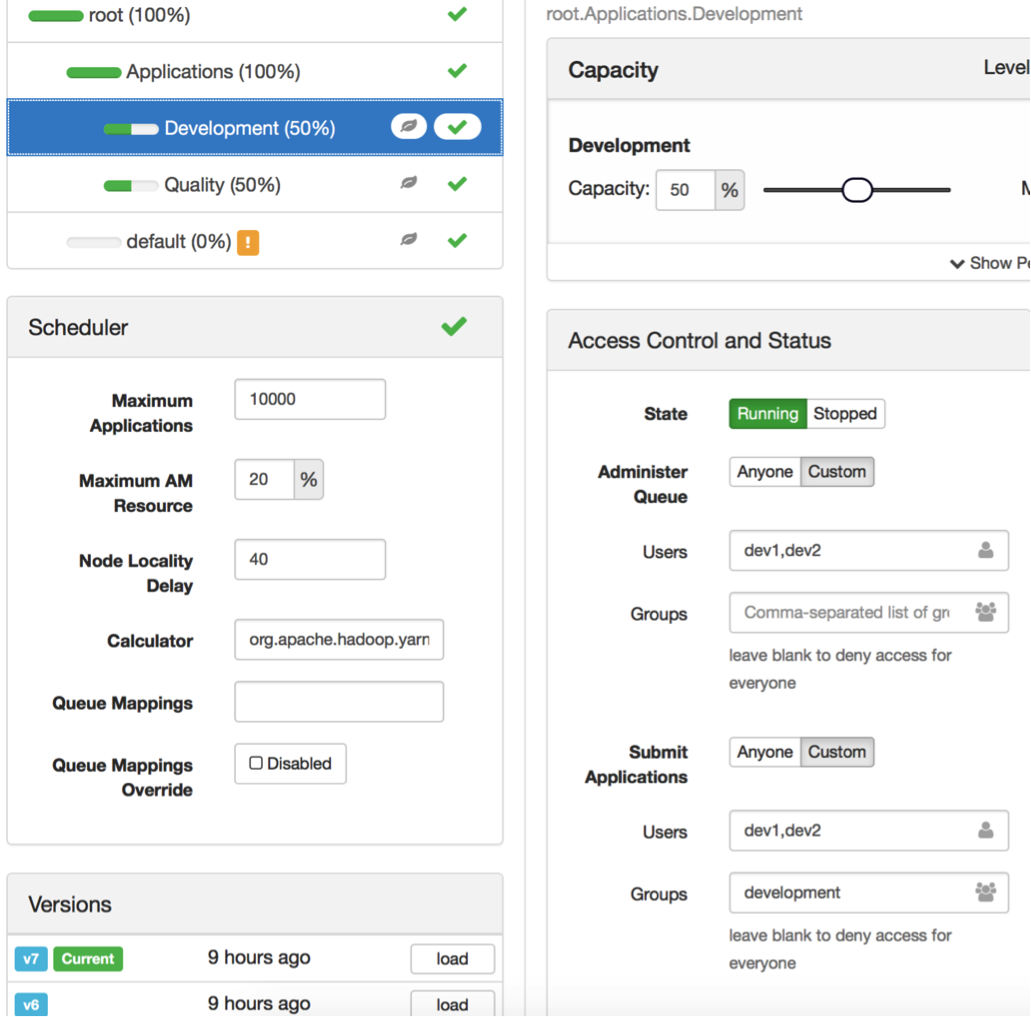

Queue properties: root.Applications.Development

    #######################################################################################
    # Only users dev1,dev2 can ADMINISTER (submit,access,kill) jobs in Development
    # queue by setting acl_administer_queue=dev1,dev2
    # Users dev1,dev2 and the development group can SUBMIT an application to
    # Development queue by setting acl_submit_applications=dev1,dev2 development
    #######################################################################################
    yarn.scheduler.capacity.root.Applications.Development.acl_administer_queue=dev1,dev2
    yarn.scheduler.capacity.root.Applications.Development.acl_submit_applications=dev1,dev2 development
    yarn.scheduler.capacity.root.Applications.Development.minimum-user-limit-percent=100
    yarn.scheduler.capacity.root.Applications.Development.maximum-capacity=100
    yarn.scheduler.capacity.root.Applications.Development.user-limit-factor=1
    yarn.scheduler.capacity.root.Applications.Development.state=RUNNING
    yarn.scheduler.capacity.root.Applications.Development.capacity=50
    yarn.scheduler.capacity.root.Applications.Development.ordering-policy=fifo

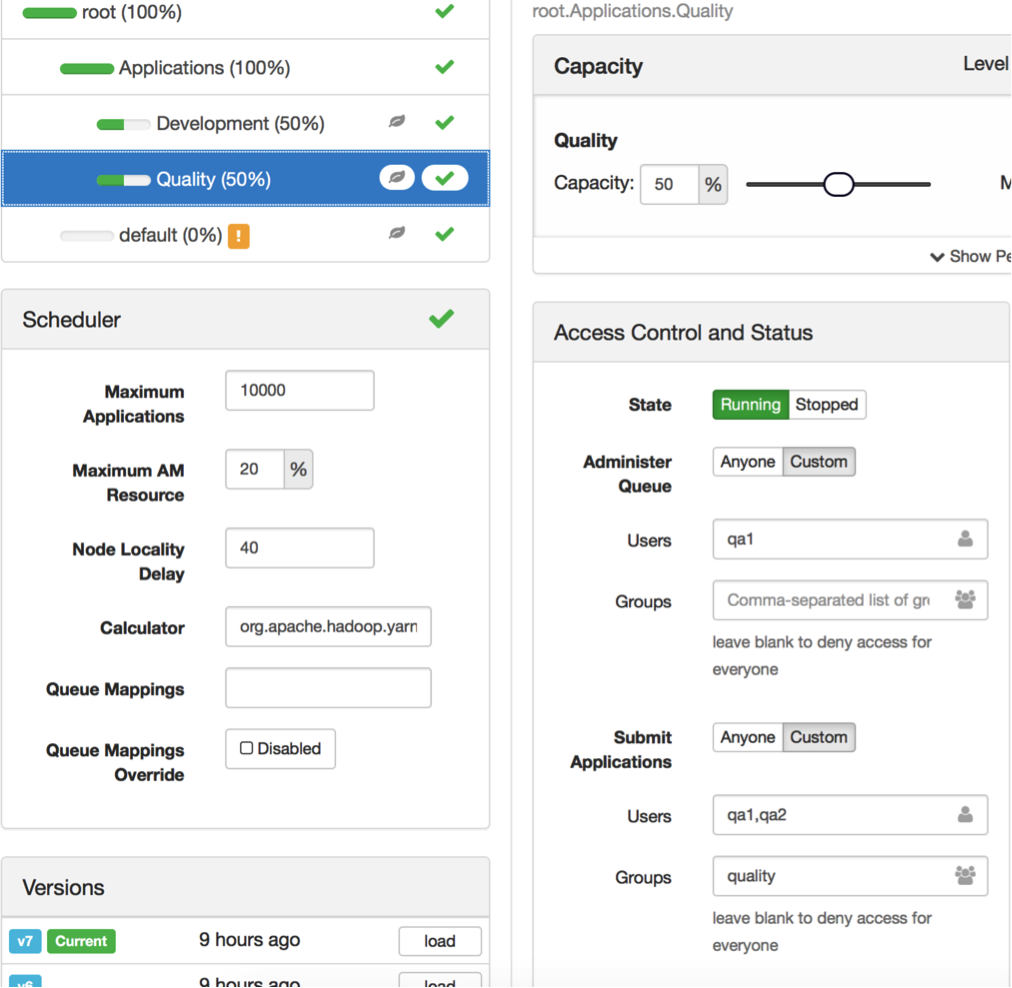

Queue properties: root.Applications.Quality

    #######################################################################################
    # Only user qa1 can ADMINISTER (submit,access,kill) the Quality queue
    # by setting acl_administer_queue=qa1
    # Users qa1,qa2 and the quality group can SUBMIT an application by setting
    # acl_submit_applications=qa1,qa2 quality
    #######################################################################################
    yarn.scheduler.capacity.root.Applications.Quality.acl_administer_queue=qa1
    yarn.scheduler.capacity.root.Applications.Quality.acl_submit_applications=qa1,qa2 quality
    yarn.scheduler.capacity.root.Applications.Quality.minimum-user-limit-percent=100
    yarn.scheduler.capacity.root.Applications.Quality.maximum-capacity=52
    yarn.scheduler.capacity.root.Applications.Quality.user-limit-factor=1
    yarn.scheduler.capacity.root.Applications.Quality.state=RUNNING
    yarn.scheduler.capacity.root.Applications.Quality.capacity=50
    yarn.scheduler.capacity.root.Applications.Quality.ordering-policy=fifo

Queue properties: root.default

    #######################################################################################
    # Disable the default queue by setting its state to STOPPED
    #######################################################################################
    yarn.scheduler.capacity.root.default.acl_submit_applications=*
    yarn.scheduler.capacity.root.default.maximum-capacity=100
    yarn.scheduler.capacity.root.default.user-limit-factor=1
    yarn.scheduler.capacity.root.default.state=STOPPED
    yarn.scheduler.capacity.root.default.capacity=0
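
When capacities are expressed as percentages, as they are here, CapacityScheduler expects the capacities of the child queues under each parent to add up to 100. The following Python sketch checks that rule for the capacity values listed above. It is an illustrative check only, not a substitute for the scheduler's own validation, and the names CAPACITIES, sibling_capacity_totals, and invalid_parents are hypothetical.

```python
from collections import defaultdict

# Capacity values from the queue properties in this article.
CAPACITIES = {
    "root.Applications": 100,
    "root.default": 0,
    "root.Applications.Development": 50,
    "root.Applications.Quality": 50,
}


def sibling_capacity_totals(capacities):
    """Sum the capacity of the child queues under each parent queue."""
    totals = defaultdict(float)
    for queue, capacity in capacities.items():
        parent = queue.rpartition(".")[0]
        totals[parent] += capacity
    return dict(totals)


def invalid_parents(capacities):
    """Return the parents whose children do not add up to 100 percent."""
    totals = sibling_capacity_totals(capacities)
    return {parent: total for parent, total in totals.items()
            if abs(total - 100.0) > 1e-6}


print(sibling_capacity_totals(CAPACITIES))
print(invalid_parents(CAPACITIES))
```

For the configuration in this article, both root and root.Applications total 100, so invalid_parents returns an empty dictionary.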

Managing queues with the Ambari YARN Queue Manager

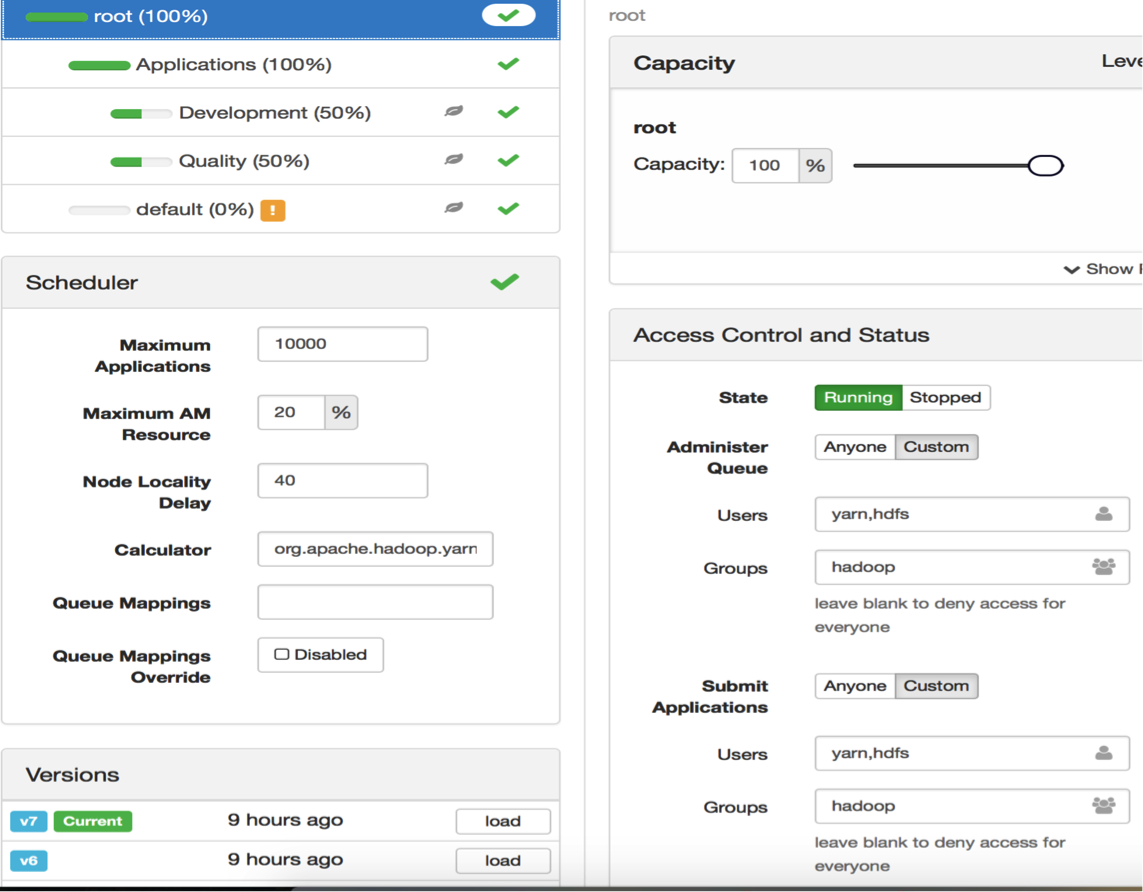

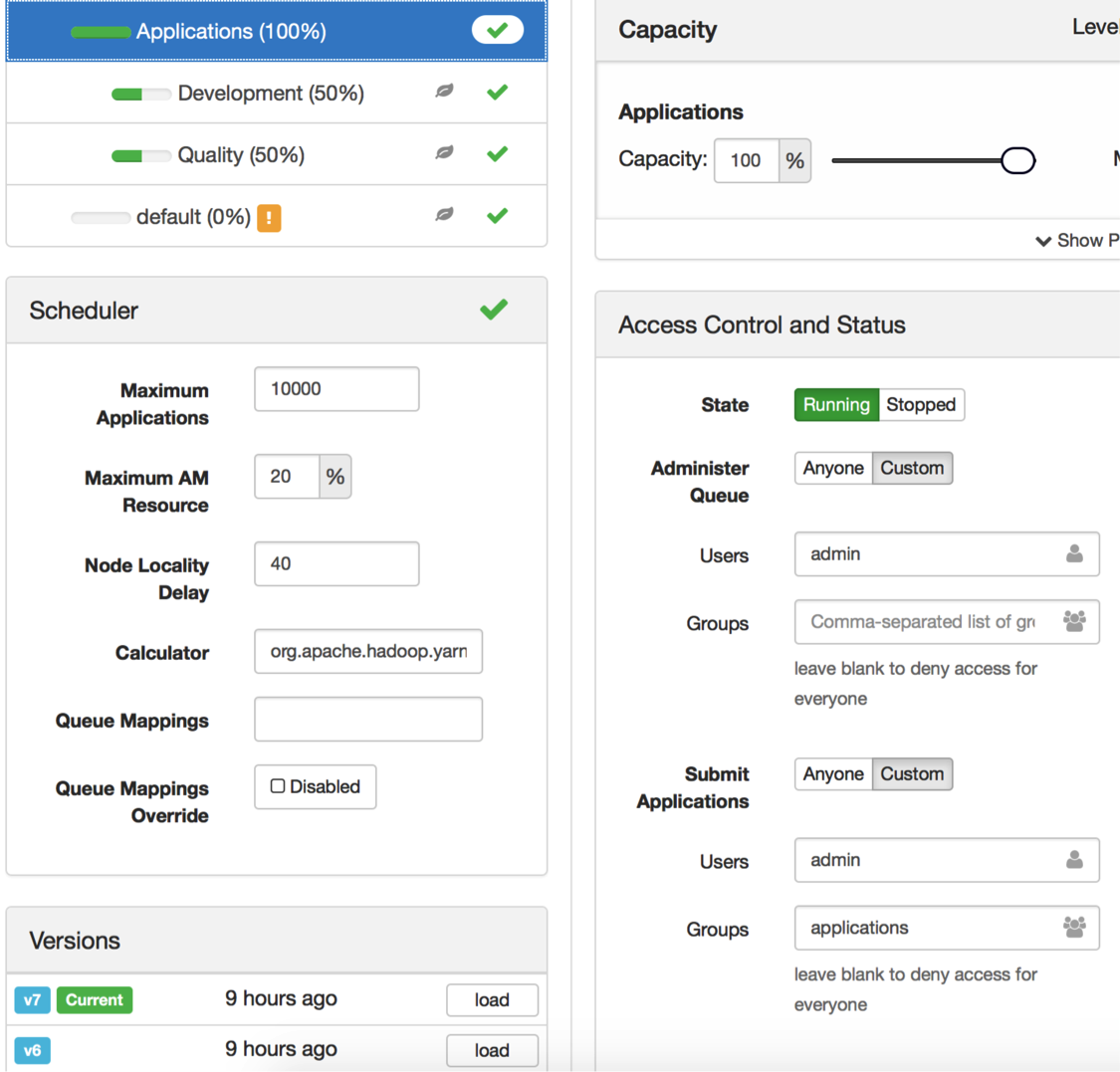

You can also manage these configurations graphically by using the YARN Queue Manager (Figures 3 – 6). This approach has the advantage of alerting you to any improper configurations.

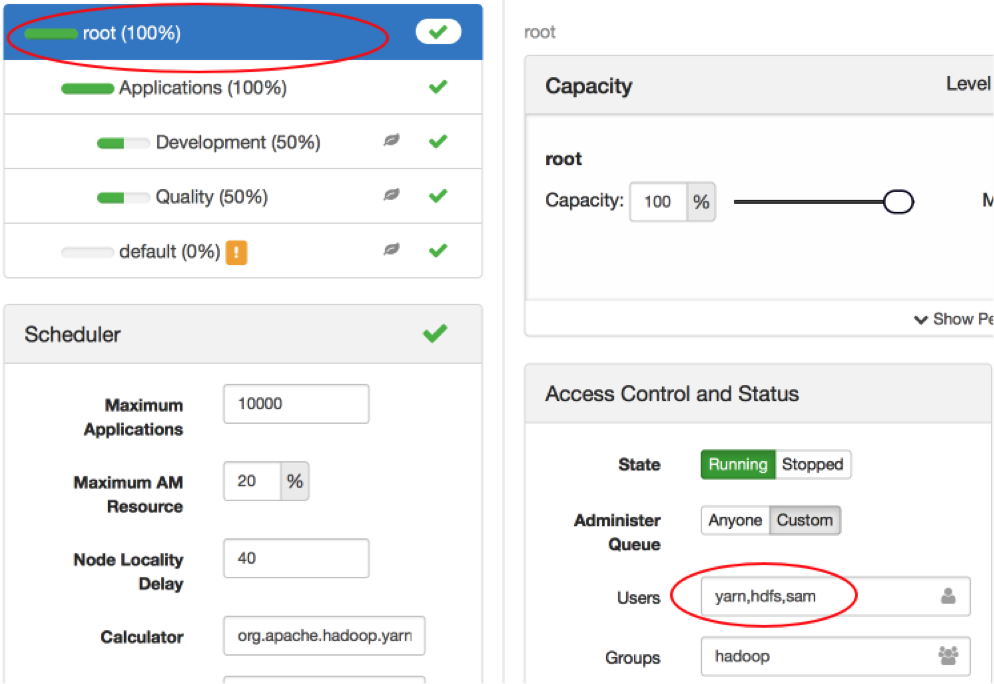

Figure 3: YARN Queue Manager view of the root queue

Figure 4: YARN Queue Manager view of the Applications queue

Figure 5: YARN Queue Manager view of the Development queue

Figure 6: YARN Queue Manager view of the Quality queue

Refreshing queues

After you have configured the queues and saved the changes to the capacity-scheduler.xml file, you will need to refresh the queues. To do so, run the following command:

    sudo -u yarn yarn rmadmin -refreshQueues

After the queues have been refreshed, verify the changes by running the following command:

    mapred queue -list
    Output:
    ======================
    Queue Name : Applications
    Queue State : running
    Scheduling Info : Capacity: 100.0, MaximumCapacity: 100.0, CurrentCapacity: 0.0
    ======================
    Queue Name : Development
    Queue State : running
    Scheduling Info : Capacity: 50.0, MaximumCapacity: 50.0, CurrentCapacity: 0.0
    ======================
    Queue Name : Quality
    Queue State : running
    Scheduling Info : Capacity: 50.0, MaximumCapacity: 50.0, CurrentCapacity: 0.0
    ======================
    Queue Name : default
    Queue State : stopped
    Scheduling Info : Capacity: 0.0, MaximumCapacity: 0.0, CurrentCapacity: 0.0

Reviewing user ACLs

Display the operations that each user can do for each queue by running the following command. The operations will be either ADMINISTER_QUEUE or SUBMIT_APPLICATIONS, which map to the properties acl_administer_queue and acl_submit_applications.

    sudo -u yarn mapred queue -showacls
    Output:
    Queue acls for user : yarn
    Queue Operations
    =====================
    root ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Applications ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Development ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Quality ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    default ADMINISTER_QUEUE,SUBMIT_APPLICATIONS

    sudo -u hdfs mapred queue -showacls
    Output:
    Queue acls for user : hdfs
    Queue Operations
    =====================
    root ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Applications ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Development ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Quality ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    default ADMINISTER_QUEUE,SUBMIT_APPLICATIONS

    sudo -u admin mapred queue -showacls
    Output:
    Queue acls for user : admin
    Queue Operations
    =====================
    root
    Applications ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Development ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Quality ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    default SUBMIT_APPLICATIONS

    sudo -u dev1 mapred queue -showacls
    Output:
    Queue acls for user : dev1
    Queue Operations
    =====================
    root
    Applications
    Development ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Quality
    default SUBMIT_APPLICATIONS

    sudo -u dev2 mapred queue -showacls
    Output:
    Queue acls for user : dev2
    Queue Operations
    =====================
    root
    Applications
    Development ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    Quality
    default SUBMIT_APPLICATIONS

    sudo -u qa1 mapred queue -showacls
    Output:
    Queue acls for user : qa1
    Queue Operations
    =====================
    root
    Applications
    Development
    Quality ADMINISTER_QUEUE,SUBMIT_APPLICATIONS
    default SUBMIT_APPLICATIONS

    sudo -u qa2 mapred queue -showacls
    Output:
    Queue acls for user : qa2
    Queue Operations
    =====================
    root
    Applications
    Development
    Quality SUBMIT_APPLICATIONS
    default SUBMIT_APPLICATIONS

Submitting a job to a queue

Because the default queue is STOPPED, submitting a job requires you to identify the queue to which the job must be submitted. Otherwise, the following error is returned:

    Failed to submit application…Queue root.default is STOPPED. Cannot accept submission…

To submit a job to a specific queue, use the mapreduce.job.queuename property. For example:

    mapreduce.job.queuename=Development

When you have determined the queue to which you want to submit a job, review the users who can submit jobs to the different queues. In our examples, the Development queue was configured with the following settings:

    acl_administer_queue=dev1,dev2
    acl_submit_applications=dev1,dev2 development

Users dev1 and dev2 and anyone belonging to the development group can submit to the Development queue, but only dev1 and dev2 can kill an application. They are the only administrators, because no group is configured for administration.

Before you can submit a job as user dev1 to the Development queue, a directory for this user must exist in HDFS with the correct permissions and ownership. In the following commands, replace ${IOP_version} with the current version, or with * if only one version is installed. For example:

    sudo -u hdfs hdfs dfs -mkdir /user/dev1
    sudo -u hdfs hdfs dfs -chown dev1:development /user/dev1
    sudo -u hdfs hdfs dfs -ls /user
    sudo -u dev1 yarn jar /usr/iop/${IOP_version}/hadoop-mapreduce/hadoop-mapreduce-examples.jar pi -D mapreduce.job.queuename=Development 16 1000

If you submit a job to the Development queue as user qa1 (not one of the configured users), a submission error is returned, which indicates that the ACLs are operational.

    sudo -u hdfs hdfs dfs -mkdir /user/qa1
    sudo -u hdfs hdfs dfs -chown qa1:quality /user/qa1
    sudo -u hdfs hdfs dfs -ls /user
    sudo -u qa1 yarn jar /usr/iop/${IOP_version}/hadoop-mapreduce/hadoop-mapreduce-examples.jar pi -D mapreduce.job.queuename=Development 16 1000
    Output error:
    AccessControlException: User qa1 does not have permission to submit application_1496729127210_0002 to queue Development

Moreover, inheritance rules for the root queue are configured for users yarn, hdfs, and group hadoop, to which qa1 does not belong:

    root.acl_administer_queue=yarn,hdfs hadoop
    root.acl_submit_applications=yarn,hdfs hadoop
    root.Applications.Development.acl_administer_queue=dev1,dev2
    root.Applications.Development.acl_submit_applications=dev1,dev2 development

Users yarn and hdfs can successfully submit to the Development queue because the inheritance rules allow it.

It is important for a user such as yarn to be able to write to the /user/yarn/ directory; otherwise, an Access Control Exception is returned:

    AccessControlException: Permission denied: user=yarn, access=WRITE, inode="/user/yarn/QuasiMonteCarlo_1484075183854_1546198490/in":hdfs:hdfs:drwxr-xr-x

However, user yarn does not belong to group hdfs, which is required to write to the hdfs:/user/yarn/ directory.

    id yarn
    Output:
    uid=1014(yarn) gid=1002(hadoop) groups=1002(hadoop)

On the other hand, user admin’s primary group is hdfs, and admin can read and execute in directories that are owned by user hdfs.

    id admin
    Output:
    uid=1019(admin) gid=1004(hdfs) groups=1004(hdfs),1002(hadoop)

A detailed discussion of user permissions and group membership is beyond the scope of this article, but they do play a role in the ACLs that are available for queues. If you want to add user yarn to the hdfs group, you can perform a user modification, as shown in the following example:

    usermod -a -G hdfs yarn
    id yarn
    Output:
    uid=1005(yarn) gid=1001(hadoop) groups=1001(hadoop),1003(hdfs)

If you want to change the primary group for a user such as yarn, you can do so by using system commands. For example:

    usermod -g hdfs yarn
    id yarn
    Output:
    uid=1005(yarn) gid=1003(hdfs) groups=1003(hdfs),1001(hadoop)

For more information, see Creating HDFS user directories and setting permissions.

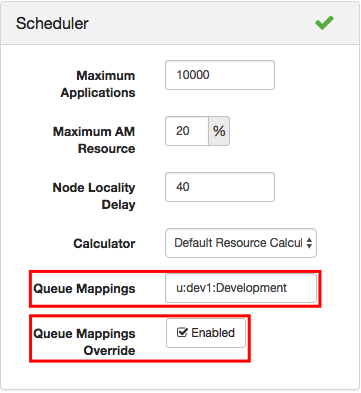

Overriding default queue mappings

If you want to override the default queues, you can enable the queue mappings configuration to change the queues to which applications are submitted by default. This feature is not enabled by default. If you enable it for user dev1 and the Development queue, you no longer need to specify the queue to which a job should be submitted. Recall that in our example, we set the mapreduce.job.queuename property to Development.

To enable queue mapping, you need to set two properties that are disabled by default:

    yarn.scheduler.capacity.queue-mappings-override.enable=true
    yarn.scheduler.capacity.queue-mappings=u:dev1:Development

User dev1 is now mapped for default submission to the Development queue.

    sudo -u dev1 yarn jar /usr/iop/${IOP_version}/hadoop-mapreduce/hadoop-mapreduce-examples.jar pi 16 1000

It is important to remember that if queue mappings are enabled and configured, they will override user-specified values. This is useful for administrators who might want to control where applications are submitted, regardless of what users might have specified.
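
The effect of the two properties can be sketched in Python. This is a simplified model, assuming that mappings are checked from left to right and that the first match wins; it handles only literal u:user:queue and g:group:queue entries and ignores placeholder forms. The function names parse_queue_mappings and resolve_queue are hypothetical.

```python
def parse_queue_mappings(value):
    """Parse a value such as 'u:dev1:Development' into (kind, name, queue) tuples."""
    mappings = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        kind, name, queue = entry.split(":")
        if kind not in ("u", "g"):
            raise ValueError(f"unknown mapping type: {kind!r}")
        mappings.append((kind, name, queue))
    return mappings


def resolve_queue(mappings, user, user_groups, requested=None, override=False):
    """Return the queue that a submission lands in under this simplified model."""
    # A queue named by the user is kept unless the override property is enabled.
    if requested is not None and not override:
        return requested
    for kind, name, queue in mappings:
        if kind == "u" and name == user:
            return queue
        if kind == "g" and name in user_groups:
            return queue
    # No mapping matched: fall back to the requested queue or the default queue.
    return requested if requested is not None else "default"


mappings = parse_queue_mappings("u:dev1:Development")
print(resolve_queue(mappings, "dev1", ["development"]))
print(resolve_queue(mappings, "dev1", ["development"],
                    requested="Quality", override=True))
```

In this model, dev1 lands in Development both when no queue is named and when the override is enabled and a different queue was requested.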

You can also use the YARN Queue Manager to manage queue mappings.

Figure 7: Setting queue mappings with the YARN Queue Manager

If you want to explore more advanced queue mappings, see Queue Administration & Permissions.

Killing a job on a particular queue

The administrator of a queue has the authority to kill jobs in the queue. This is configured through the acl_administer_queue property. For example:

    acl_administer_queue=dev1,dev2 development

Let’s modify the previous pi example to allow it to run longer so that another terminal can be opened to kill the longer running job:

    sudo -u dev1 yarn jar /usr/iop/${IOP_version}/hadoop-mapreduce/hadoop-mapreduce-examples.jar pi -D mapreduce.job.queuename=Development 16 1000000

In a second terminal, try to kill the application as user qa1, who is not configured as an administrator of the Development queue. Begin by listing all the running jobs, then run the kill command against the running application that is identified by the Application-Id.

    yarn application -list
    Output:
    Total number of applications (application-types: [] and states: [SUBMITTED, ACCEPTED, RUNNING]):1
    Application-Id                  Application-Name  Application-Type  User  Queue        State     Final-State  Progress  Tracking-URL
    application_1484086427510_0001  QuasiMonteCarlo   MAPREDUCE         dev1  Development  ACCEPTED  UNDEFINED    0%        N/A

    sudo -u qa1 yarn application -kill application_1484086427510_0001
    Output:
    AccessControlException: User qa1 cannot perform operation MODIFY_APP on application_1484086427510_0001

User qa1 is not an administrator and is not permitted to kill a job on the Development queue. If you run the kill command as user dev1 or admin, the operation succeeds. User dev1 is configured as an administrator, and user admin is an administrator of the Development queue through inheritance.

    sudo -u dev1 yarn application -kill application_1484086427510_0001
    Output:
    Killed application application_1484086427510_0002

    sudo -u admin yarn application -kill application_1484086427510_0001
    Output:
    Killed application application_1484086427510_0003

Configuring the Resource Manager UI with KNOX and LDAP

Up to this point, the discussion has focused on managing queues by using ACLs and accessing jobs from the command line. You can also configure the demo LDAP server that comes with IOP to allow system users to log in and interact with the Resource Manager UI through Knox.

First, ensure that the demo LDAP server is not running (Ambari > Knox > Service Actions > Stop Demo LDAP). Then, edit the LDAP configuration through Ambari to add users to the server. The configuration file is located on the host at /etc/knox/conf/users.ldif. It is a good idea to use Ambari to manage these changes (Ambari > Knox > Configs > Advanced users-ldif); otherwise, they are lost on restart of the demo LDAP server.

Add users and passwords for admin, dev1, dev2, qa1, and qa2 by appending the following content to users-ldif in Ambari. Click Save and follow the on-screen instructions.

    # entry for sample user admin
    dn: uid=admin,ou=people,dc=hadoop,dc=apache,dc=org
    objectclass:top
    objectclass:person
    objectclass:organizationalPerson
    objectclass:inetOrgPerson
    cn: admin
    sn: admin
    uid: admin
    userPassword:admin-password

    # entry for sample user dev1
    dn: uid=dev1,ou=people,dc=hadoop,dc=apache,dc=org
    objectclass:top
    objectclass:person
    objectclass:organizationalPerson
    objectclass:inetOrgPerson
    cn: dev1
    sn: dev1
    uid: dev1
    userPassword:dev1-password

    # entry for sample user dev2
    dn: uid=dev2,ou=people,dc=hadoop,dc=apache,dc=org
    objectclass:top
    objectclass:person
    objectclass:organizationalPerson
    objectclass:inetOrgPerson
    cn: dev2
    sn: dev2
    uid: dev2
    userPassword:dev2-password

    # entry for sample user qa1
    dn: uid=qa1,ou=people,dc=hadoop,dc=apache,dc=org
    objectclass:top
    objectclass:person
    objectclass:organizationalPerson
    objectclass:inetOrgPerson
    cn: qa1
    sn: qa1
    uid: qa1
    userPassword:qa1-password

    # entry for sample user qa2
    dn: uid=qa2,ou=people,dc=hadoop,dc=apache,dc=org
    objectclass:top
    objectclass:person
    objectclass:organizationalPerson
    objectclass:inetOrgPerson
    cn: qa2
    sn: qa2
    uid: qa2
    userPassword:qa2-password

Then, start the demo LDAP server to enable the new users (Ambari > Knox > Service Actions > Start Demo LDAP). To check whether the server is running, run the following command:

    ps -ef |grep ldap
    root 3241 13147 0 18:10 pts/0 00:00:00 su - knox -c java -jar /usr/iop/current/knox-server/bin/ldap.jar /usr/iop/current/knox-server/conf
    knox 3242 3241 0 18:10 ? 00:00:07 java -jar /usr/iop/current/knox-server/bin/ldap.jar /usr/iop/current/knox-server/conf

The users who manage queues (admin, dev1, dev2, qa1, and qa2) are now mapped to the demo LDAP server. This gives those users access to the Resource Manager UI (Ambari > YARN > Quick Links > ResourceManager UI), where they can view, administer, or kill jobs. Users who want to view running jobs in the Resource Manager UI are prompted for a user ID and password; these credentials are specified in users-ldif: choose one of them for the login.
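
The users-ldif entries added earlier all follow the same pattern, so they could be generated rather than typed by hand. The following Python sketch reproduces the entries from this article for a list of user IDs. The names LDIF_TEMPLATE and ldif_entries are hypothetical, and the uid-password convention simply mirrors the sample passwords above; it is suitable only for the demo LDAP server.

```python
# One entry per user, following the layout of the sample entries in this article.
LDIF_TEMPLATE = """\
# entry for sample user {uid}
dn: uid={uid},ou=people,dc=hadoop,dc=apache,dc=org
objectclass:top
objectclass:person
objectclass:organizationalPerson
objectclass:inetOrgPerson
cn: {uid}
sn: {uid}
uid: {uid}
userPassword:{uid}-password
"""


def ldif_entries(uids):
    """Return LDIF text with one entry per user ID, separated by blank lines."""
    return "\n".join(LDIF_TEMPLATE.format(uid=uid) for uid in uids)


print(ldif_entries(["admin", "dev1", "dev2", "qa1", "qa2"]))
```

The printed text can be pasted into the Advanced users-ldif field in Ambari.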

Tip: When you log in to the Resource Manager UI, use your browser in private mode so that no cookies are saved. This UI has no logout capability, and you would have to delete cookies to change users.

Accessing the Resource Manager UI

Using the YARN Queue Manager (Figure 8), let’s add user sam as an administrator of the root queue. User sam is configured for the demo LDAP server.

Figure 8: User sam’s queue access control

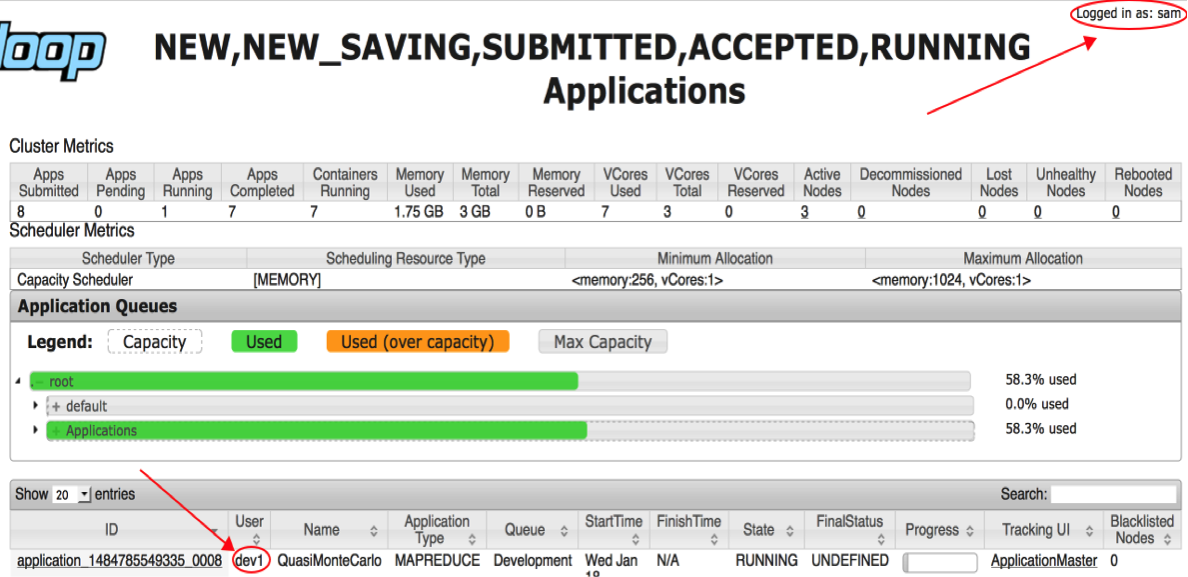

Recall that queues have hierarchical inheritance. User sam will be able to administer any subqueue of root, which means that sam can submit, view, or kill any job, including jobs submitted by user dev1 (Figure 9).

Figure 9: User sam demonstrating administrator privileges

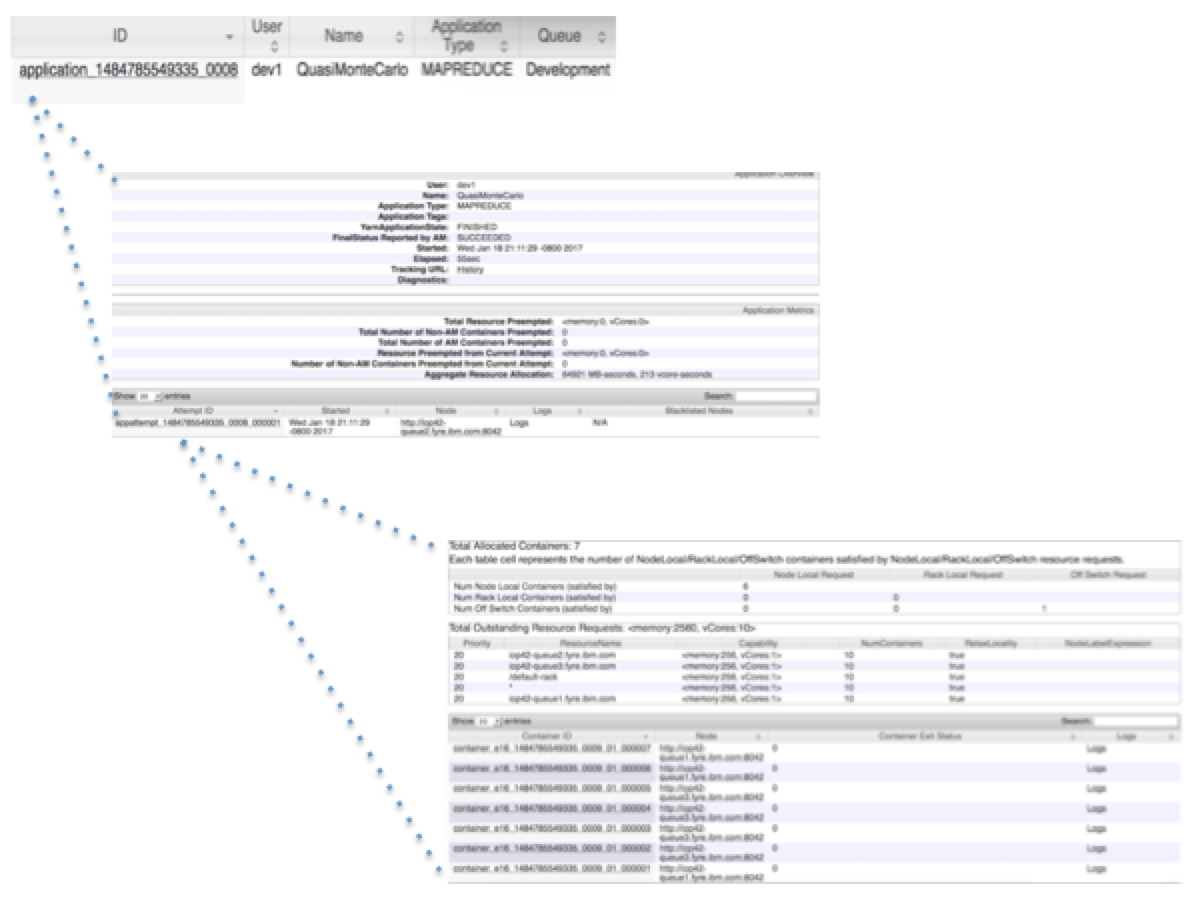

User sam can click the application ID link to view the application details (Figure 10).

Figure 10: Application details

Access and denial in the Resource Manager UI

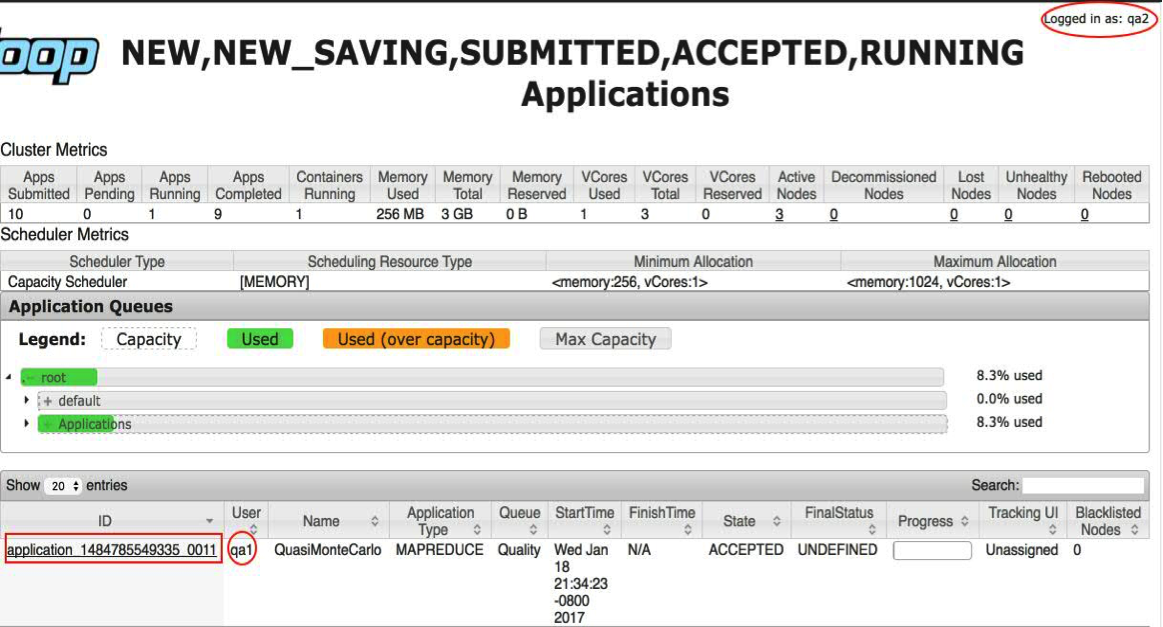

Recall that in our example, the Quality queue has one administrator (qa1) and two job submission users (qa1 and qa2, Figure 11).

Figure 11: Quality queue administrator and submitters

Figures 12 and 13 demonstrate access and denial. User qa1 has submitted an application and user qa2 is logged into the Resource Manager UI. User qa2 can view the active jobs in the scheduler, but when user qa2 tries to access the job that was submitted by qa1, an error is returned (Figure 13). User qa2 is not an administrator of the Quality queue and is not the user who submitted the job. You must be either the owner of the job or an administrator of the queue to access an application.

Figure 12: User qa2 is logged into the Resource Manager UI and can view the Quality queue

Figure 13: User qa2 is denied access to Quality queue job details in the Resource Manager UI

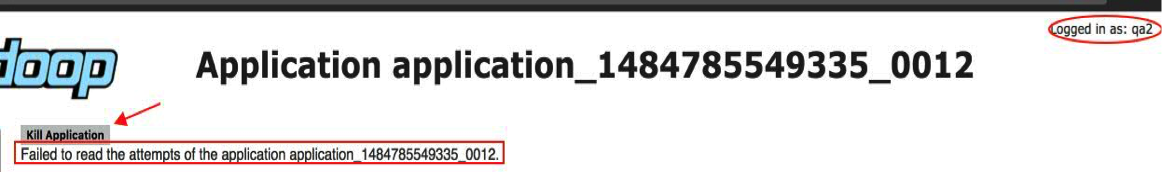

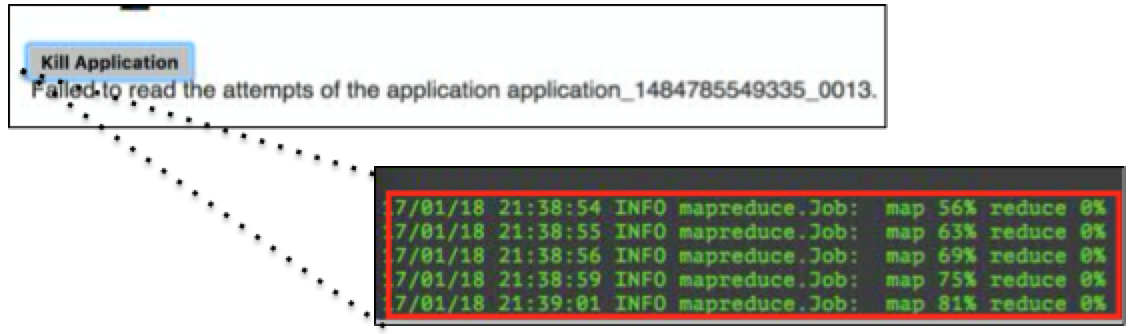

As you might expect, user qa2 cannot kill qa1’s job either (Figure 14). Clicking the Kill Application button has no effect.

Figure 14: A user who is not an administrator cannot kill a job in the Resource Manager UI

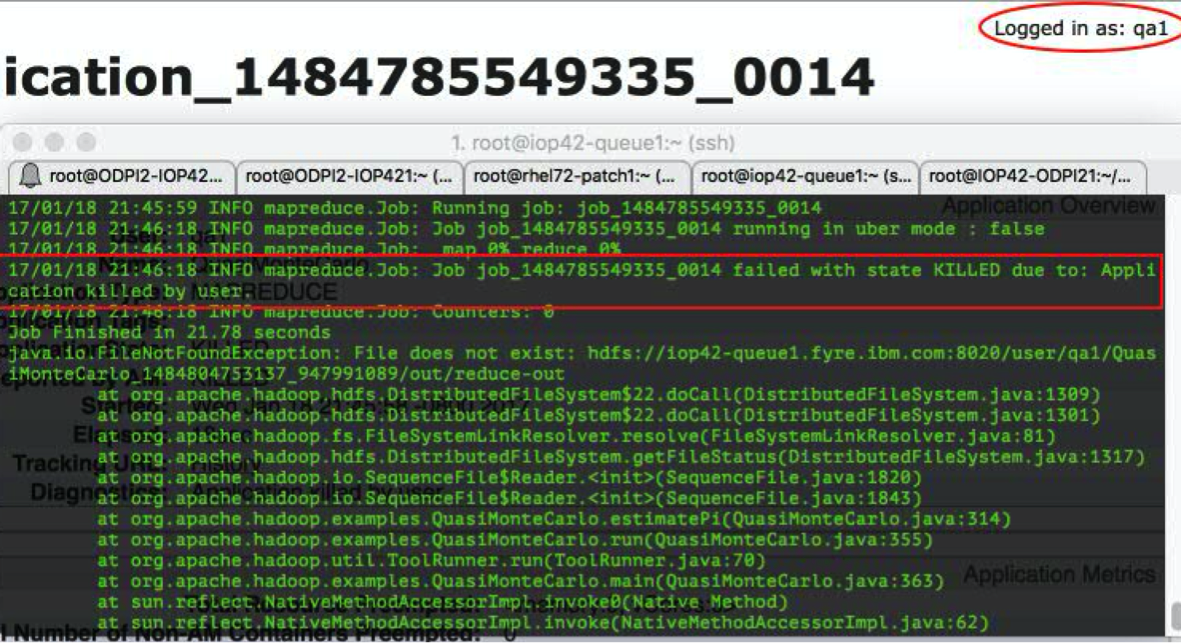

User qa1, who is an administrator, can successfully terminate the application (Figure 15).

Figure 15: An administrator can kill jobs in the Resource Manager UI

Creating HDFS user directories and setting permissions

When you run some of the example jobs in this document, the MapReduce application tries to write to the HDFS under /user/${uid}, and if the directories for uid are not defined, an HDFS error is returned:

    org.apache.hadoop.security.AccessControlException: Permission denied: user=qa2, access=WRITE, inode="/user/qa2/QuasiMonteCarlo_1484811559730_783098269/in":qa1:quality:drwxr-xr-x

And if the user does not exist, an error about the .staging folder is returned:

    Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=user1, access=WRITE, inode="/user/user1/.staging":hdfs:hdfs:drwxr-xr-x

If you encounter such errors, create a directory and set the ownership correctly so that the MapReduce job can write to the HDFS. For example, suppose that you have the following directories and permissions:

    sudo -u hdfs hdfs dfs -ls /user
    Output:
    Found 12 items
    drwxr-xr-x - admin hdfs 0 2017-01-18 15:51 /user/admin
    drwxrwx--- - ambari-qa hdfs 0 2017-01-04 11:34 /user/ambari-qa
    drwxr-xr-x - dev1 development 0 2017-01-18 21:16 /user/dev1
    drwxr-xr-x - hbase hdfs 0 2017-01-04 11:29 /user/hbase
    drwxr-xr-x - hcat hdfs 0 2017-01-04 11:29 /user/hcat
    drwx------ - hdfs hdfs 0 2017-01-10 11:08 /user/hdfs
    drwx------ - hive hdfs 0 2017-01-04 11:29 /user/hive
    drwxrwxr-x - oozie hdfs 0 2017-01-04 11:28 /user/oozie
    drwxr-xr-x - qa1 quality 0 2017-01-18 23:33 /user/qa1
    drwxr-xr-x - hdfs hdfs 0 2017-01-18 16:41 /user/sam
    drwxr-xr-x - spark hadoop 0 2017-01-04 11:27 /user/spark
    drwxrwxrwx - hdfs hdfs 0 2017-01-09 12:24 /user/words

Create a directory for user qa2:

    sudo -u hdfs hdfs dfs -mkdir /user/qa2

Set the ownership for /user/qa2:

    sudo -u hdfs hdfs dfs -chown qa2:quality /user/qa2

The directory listing now includes /user/qa2:

    sudo -u hdfs hdfs dfs -ls /user
    Output:
    Found 13 items
    drwxr-xr-x - admin hdfs 0 2017-01-18 15:51 /user/admin
    drwxrwx--- - ambari-qa hdfs 0 2017-01-04 11:34 /user/ambari-qa
    drwxr-xr-x - dev1 development 0 2017-01-18 21:16 /user/dev1
    drwxr-xr-x - hbase hdfs 0 2017-01-04 11:29 /user/hbase
    drwxr-xr-x - hcat hdfs 0 2017-01-04 11:29 /user/hcat
    drwx------ - hdfs hdfs 0 2017-01-10 11:08 /user/hdfs
    drwx------ - hive hdfs 0 2017-01-04 11:29 /user/hive
    drwxrwxr-x - oozie hdfs 0 2017-01-04 11:28 /user/oozie
    drwxr-xr-x - qa1 quality 0 2017-01-18 23:33 /user/qa1
    drwxr-xr-x - qa2 quality 0 2017-01-18 23:35 /user/qa2
    drwxr-xr-x - hdfs hdfs 0 2017-01-18 16:41 /user/sam
    drwxr-xr-x - spark hadoop 0 2017-01-04 11:27 /user/spark
    drwxrwxrwx - hdfs hdfs 0 2017-01-09 12:24 /user/words

Securing Hadoop web interfaces with Kerberos

This article has demonstrated queue ACLs by using a command line interface and accessing them through a web interface with Knox. If you want to control access to job output in the web UI, you must control access to the REST interfaces, which can be done with Kerberos. That topic is beyond the scope of this article, but you can find information about securing REST interfaces here.

Additional resources

You can find supporting documents, such as the list of commands to create users on the host, or details about CapacityScheduler configuration, in a GitHub repository.