STREAMING DATA THROUGH THE IBM COMMON DATA PROVIDER FOR Z

IBM Common Data Provider for z Systems enables a customer to gather data from many different data sources on a z/OS system and provides a single framework to forward that data to one or more remote receivers. This allows the data to be received off platform in a controlled and consumable fashion.

IBM Z Decision Support can be configured to stream curated data out through the IBM Common Data Provider for z. This includes both hourly aggregate records and timestamp level records. Streaming is enabled from either the new Continuous Collector or the older Batch Collector.

Figure: IBM Z Decision Support integration with IBM Common Data Provider for z Systems

By streaming the data through the IBM Common Data Provider, you can copy selected IBM Z Decision Support data into analytic solutions, such as Splunk and ELK, and then use those solutions to provide enhanced reporting on the data. To support this capability, IBM Z Decision Support provides a subset of the Key Performance Metric reports as Splunk dashboards.

PREPARING IBM Z DECISION SUPPORT TO STREAM DATA

GET IBM Z DECISION SUPPORT UP AND RUNNING

Install IBM Z Decision Support and set it up to feed data into Db2. Refer to the IBM Z Decision Support documentation. Starting from a stable configuration makes the job of connecting it to the IBM Common Data Provider for Z a lot simpler.

GET IBM COMMON DATA PROVIDER FOR Z UP AND RUNNING

Install the IBM Common Data Provider for Z and get it running. Refer to the IBM Common Data Provider for Z documentation.

RUN THE DATA MAPPING UTILITY

Run the new Data Mapping Utility provided with IBM Z Decision Support.

Configure the JCL that is shipped in DRL.SDRLCNTL(DRLJCDPS). The JCL runs three utilities against your active IBM Z Decision Support configuration in Db2.

• The first utility (DRLELSTT) creates a list of all the data tables in the database. This is used as input to the other two utilities to ensure they produce consistent output.

• The second utility (DRLEMTJS) creates a table.json file for every IBM Z Decision Support data table in Db2. Each file maps the layout of a specific data table. The table.json files are required by the Publication Data Mover.

• The third utility (DRLEMCDS) creates an izds.streams.json file, which contains the IBM Common Data Provider for z stream definitions for each IBM Z Decision Support data table in Db2. The izds.streams.json file is required by IBM Common Data Provider for z. It needs to be copied into the configuration folder for the IBM Common Data Provider for z User Interface.

Note: If you later apply maintenance or customization to your IBM Z Decision Support Db2 database that results in database schema changes, you must rerun your customized DRLJCDPS job to create new data table mappings. Otherwise, the streamed data will not be in the format that the Data Mover expects, which can lead to incomplete or incorrect conversion to JSON.

STREAMING DATA FROM IBM Z DECISION SUPPORT

The mechanism for streaming data out of IBM Z Decision Support requires some setup.

Complete the following steps:

1. Allocate a new publication logstream to hold the streamed data.

2. Create a new Data Mover instance – a Publisher. This instance will take the data from the publication logstream, convert it to JSON and send it to the IBM Common Data Provider for z.

3. Configure one or more IBM Z Decision Support collectors to stream data.

4. Configure the IBM Common Data Provider for Z to stream the data to the correct subscribers.

Once all of these steps have been completed, data will flow from IBM Z Decision Support to the subscribers via the IBM Common Data Provider for z.

ALLOCATING THE PUBLICATION LOGSTREAM

Refer to the following link for instructions to set up a log stream:

A publication log stream is required for every system that is running an instance of the IBM Z Decision Support Collector.

CREATE A NEW DATA MOVER INSTANCE

Complete the following steps to configure the Publication Data Mover to move the data from the Publication Log stream to the IBM Common Data Provider for z.

CREATE A NEW PUBLICATION DATA MOVER DIRECTORY

Take a copy of the SMP/E installed IDSz/DataMover directory. This new directory will be the working directory for the Publication Data Mover:

cp -R IDSz/DataMover/* IZDS/publisher

CUSTOMIZE THE PUBLICATION DATA MOVER PROPERTIES

Edit the Publication.properties file in the IZDS/publisher/config directory as follows:

• Change input.1.log stream to the newly created publication log stream name.

• Change process.1.1.encoding if your system uses a code page other than the default IBM-1047.

• Change output.1.1.port to the output port on the TCPIP stage that the IBM Common Data Provider for z Data Streamer is listening on.
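After editing the file, a quick sanity check of the edited values can catch simple mistakes before the started task is created. The following Python sketch is illustrative only and is not part of the product. It assumes the file uses plain key=value lines, and the sample content, including the port number 51401, is a hypothetical placeholder. Only the two property names process.1.1.encoding and output.1.1.port are taken from the list above.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # or ! comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_publisher_settings(props):
    """Return a list of problems found in the output port setting."""
    problems = []
    port = props.get("output.1.1.port", "")
    if not (port.isdigit() and 1 <= int(port) <= 65535):
        problems.append("output.1.1.port is not a valid TCP port: %r" % port)
    return problems


# Hypothetical file content; the values are placeholders, not recommendations.
sample = """
# Publication Data Mover settings (illustrative)
process.1.1.encoding = IBM-1047
output.1.1.port = 51401
"""

props = parse_properties(sample)
# IBM-1047 is the default named in the text; it is used here as the fallback.
print("encoding:", props.get("process.1.1.encoding", "IBM-1047"))
print("problems:", check_publisher_settings(props))
```

The check only confirms that the port is a number in the valid TCP range. It cannot confirm that the Data Streamer is actually listening on that port.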

COPY THE TABLE.JSON FILES (CREATED BY RUNNING SAMPLE DRLJCDPS ABOVE) INTO THE PUBLICATION DATA MOVER DIRECTORY

cp /u/your/output/directory/TableMaps/* IZDS/publisher/mappings

CREATE A NEW STARTED TASK FOR THE PUBLICATION DATA MOVER

Refer to the following link for instructions to set up a started task:

You need to ensure that the configuration name specified in the started task is set to Publisher and it has the IZDS/publisher directory as its working directory.

Once started, the Publication Data Mover will be set up to read data from the Publication Log stream, convert it into JSON and send it to the IBM Common Data Provider for z for distribution.

SELECT THE DATA TO STREAM

First you need to decide which tables in your Db2 database you want to stream to a subscriber. Note that you can only stream tables; you cannot stream views.

It is strongly suggested that you stream hourly (or equivalent) aggregate data as this provides the best combination of low data volume and timeliness.

Note that, due to differences in rounding and computational algorithms, it is likely that data aggregated on the receiving platform (for example, Splunk or ELK), will not exactly match the higher level aggregates that IBM Z Decision Support creates in Db2. It is therefore recommended to only forward the lower level aggregate data (the hourly data) and to rely on the receiver’s aggregation capabilities for higher level views if they are required.
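One general way such a mismatch can arise is when a higher-level average is computed from hourly averages without weighting by the number of samples behind each hour. The Python sketch below illustrates that effect with made-up numbers. It does not reproduce the algorithms that IBM Z Decision Support, Splunk, or ELK actually use, and the sample values and variable names are hypothetical.

```python
# Hypothetical response-time samples (ms); hour 10 saw more traffic than hour 11.
samples_by_hour = {
    10: [100.0, 100.0, 100.0, 100.0],
    11: [200.0],
}


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


# Hourly aggregates, as a receiver would see them if only hourly data is forwarded.
hourly_means = {hour: mean(v) for hour, v in samples_by_hour.items()}
hourly_counts = {hour: len(v) for hour, v in samples_by_hour.items()}

# Naive re-aggregation: average the hourly averages.
mean_of_hourly_means = mean(list(hourly_means.values()))

# Aggregation over the raw samples.
all_samples = [x for v in samples_by_hour.values() for x in v]
overall_mean = mean(all_samples)

# Re-aggregation weighted by the sample count of each hour.
weighted_mean = sum(hourly_means[h] * hourly_counts[h] for h in hourly_means) / sum(
    hourly_counts.values()
)

print(mean_of_hourly_means, overall_mean, weighted_mean)
```

With these numbers the unweighted result is 150.0, while the raw-sample and count-weighted results are both 120.0. When building higher-level views on the receiver, it therefore helps to forward whatever counts or sums the hourly table provides alongside the averages.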

CONFIGURE THE IBM COMMON DATA PROVIDER FOR Z TO PROCESS AND DISTRIBUTE THE DATA

You need to edit your IBM Common Data Provider for Z configuration.

First, ensure you have defined a subscriber for the platform that is going to receive the data.

Find the stream definition that corresponds to each table you want to stream and add it to your configuration. The data will arrive encoded with the character set that you specified in the JSON stage of the Data Mover configuration, so if you are sending it off platform you will need to convert it to a code page the subscriber can handle, such as UTF-8. Link the stream to the subscriber for the target platform.
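To see why the conversion matters, the following Python sketch transcodes EBCDIC bytes to UTF-8. It is an illustration only: in practice the conversion is done by the IBM Common Data Provider for z configuration, not by user code. The function name to_utf8 and the sample text are hypothetical, and the cp037 codec is used as a stand-in because the Python standard library does not appear to include an IBM-1047 codec (the two code pages differ for a few characters, such as square brackets).

```python
def to_utf8(payload: bytes, source_encoding: str = "cp037") -> bytes:
    """Decode bytes from an EBCDIC code page and re-encode them as UTF-8."""
    return payload.decode(source_encoding).encode("utf-8")


# Hypothetical field name as it would look in EBCDIC on the wire.
ebcdic_bytes = "CPU_HOURS".encode("cp037")
utf8_bytes = to_utf8(ebcdic_bytes)

# The byte values differ between the two encodings, so a subscriber that
# expects UTF-8 cannot read the EBCDIC bytes directly.
print(ebcdic_bytes != utf8_bytes)
print(utf8_bytes.decode("utf-8"))
```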

Recycle your IBM Common Data Provider for z instance to pick up your new configuration. No data will flow, but IBM Common Data Provider for z will now be ready to forward the data when it arrives.

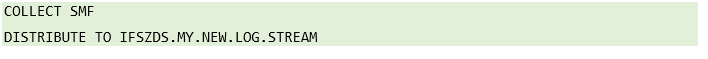

CONFIGURING THE COLLECTOR

A new parameter, DISTRIBUTE, on the COLLECT statement results in the streaming of data to the specified Publication Log stream.

Please note:

• All data tables which are sourced directly from log records (in other words, first-level aggregate data tables) are streamed when the DISTRIBUTE parameter is specified.

• Streaming occurs at the time when the inserted and updated rows are committed to the database.

• All streamed rows will be converted to JSON and presented to the IBM Common Data Provider for z Data Streamer. The IBM Common Data Provider for z Data Streamer will discard any records for which it does not have a subscriber.

• The DISTRIBUTE parameter can only be used after all configuration steps described in this chapter are completed.

Refer to the IBM Z Decision Support Language Guide and Reference for the syntax of the DISTRIBUTE parameter on the COLLECT statement.

THE PUBLICATION CYCLE

CONTINUOUS COLLECTOR

If you are using the IBM Z Decision Support Continuous Collector, data is written to the log stream throughout the day. Once this data has been sent to the remote receiver (this can take a few minutes), it is available for your subscribed application to report on. You will typically see the dashboard records updated no more than once an hour (for data in an hourly table). You should not expect timestamp records to be updated significantly more often.

BATCH COLLECTOR

If you are using the Batch Collector, data is written out at the end of the batch run or, if the collector runs out of buffer space, at multiple points during the batch run. Typically, for an overnight batch run against an hourly table, you will get 24 rows of data written out by the end of the batch run, reflecting the data from the previous day. Once this data has been passed to the receiver, it will be visible to the subscribed applications.

Note that in Batch, almost all the data is written out at the same time (just before the end of the Batch run), so it may take a while to be forwarded. This is especially true if you are forwarding timestamp records, as the batch run triggers the transmission of the whole day’s worth of timestamp records (potentially a lot of records).

UPDATE RECORDS

Due to the nature of the continuous processing cycle, there will be times when data arrives at the Continuous Collector late, long after the aggregation period that it belongs to has passed. When this happens, the Continuous Collector finds and updates the correct aggregate records in Db2. If the record is in a table that is being published via the IBM Common Data Provider for z, a copy of the original record will already have been published at the end of the aggregation period. Processing the late data causes the updated aggregate record to be republished as an update record.

For example:

• Records for a table are being sent to the Hub from SYS1 every 5 minutes

• An hourly aggregate is written out at 10:00 am

• At 10:45 am, a problem causes the flow of data from SYS1 to the Hub to stop.

• At 11:00 am, an aggregate record for the hour starting at 10:00 am is written out, but it only contains data from 10:00 - 10:45 am.

• At 11:30 am the problem is fixed and the delayed data from SYS1 is sent through to the Hub.

• At 12:00 noon, two aggregate records for SYS1 are published – the one for the hour starting at 11:00 am, and an updated version of the one for the hour starting at 10:00 am, this time containing the full set of data from 10:00 - 11:00am.

This means that the receiver may receive two aggregate records for the same aggregation period. It is the receiver’s responsibility to handle the update records correctly.

• There is no difference between the original record and the update record – except that the data in the update record is more complete.

• In the record’s JSON format there is an id string that is unique to each record (it’s built from the Db2 table key). If two records have the same id string then the newer one is an update of the older one.

• Elasticsearch will handle this automatically if you use the id field as the document id, creating a new version of the record.

• With Splunk you have to add a ‘dedup id’ step into your queries to remove any records for which there were updates.
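For a receiver that is neither Elasticsearch nor Splunk, the same rule can be applied in code: when two records share an id, keep the one that arrived later. The Python sketch below is a minimal illustration that assumes records are processed in arrival order. Only the id field comes from the description above. The function name latest_by_id, the id values, and the other fields are hypothetical and do not reflect the real record layout.

```python
def latest_by_id(records):
    """Keep only the most recently received record for each id."""
    latest = {}
    for record in records:  # records are assumed to be in arrival order
        latest[record["id"]] = record  # a later record replaces the earlier one
    return list(latest.values())


# Hypothetical records mirroring the SYS1 example: the 10:00 aggregate is
# published once with partial data and again as an update record.
received = [
    {"id": "SYS1|10:00", "hour": "10:00", "sample_count": 9},
    {"id": "SYS1|11:00", "hour": "11:00", "sample_count": 12},
    {"id": "SYS1|10:00", "hour": "10:00", "sample_count": 12},
]

for record in latest_by_id(received):
    print(record)
```

Only two records remain after the call, and the one for 10:00 is the more complete update record. If records can arrive out of order, arrival order alone is not enough to decide which copy is newer, and the receiver needs some other ordering information.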

Update records can also be generated if the Continuous Collector is short of buffer space and has to commit its aggregated data before the hour is up. This will lead to large numbers of unnecessary update records (all of which will be stored by the receiver) and an inefficient processing cycle for the Continuous Collector (the commit part of its processing is the most expensive). It is strongly recommended that you increase the memory available for the Continuous Collector’s buffer if it is doing this.